Intro

Although the focus for Mixed Reality Toolkit 2 is now understandably on Microsoft's big Mixed Reality business player - HoloLens 2 - it's still perfectly doable - and viable, IMHO - to develop Windows Mixed Reality apps for WMR immersive headsets. Case in point: most of the downloads I get for my three Mixed Reality apps in the store come from people using immersive headsets. That is actually not that strange, as immersive headsets are readily available for individuals whereas HoloLens (either 1 or 2) is not - and they cost 10-15% of the price of an actual HoloLens to boot.

And the fun thing is, if you do this correctly, you can even make apps that run on both - with only minor device-specific code. Using MRTK2, though, there are some minor problems to overcome:

- The standard MRTK2 configuration allows for only limited use of all the controller's options

- There are no samples - or at least none I could find - that easily show how to actually extend the configurations to leverage the controller's full potential

- Ditto for samples on how to intercept the events and use those from code.

I intend to fix all of the above in this article. Once and for all ;)

Configuration

If you have worked a bit with the MRTK2 before, you know what's going to follow: cloning profiles, cloning profiles and more cloning profiles. We are going some four levels deep. Don't shoot the messenger ;)

Assuming you start with a blank Unity app with the MRTK2 imported, the first step is of course to clone the Default profile - or whatever profile you wish to start with - by clicking Copy & customize.

While you are at it, turn off the diagnostics.

Next step is to clone the Input System Profile. You might need to drag the inspector a bit wider or you won't see the Clone button.

Step 3 is to clone the Controller Mapping Profile:

Expand the "Controller Definitions" section. If you then select Windows Mixed Reality Left Hand Controller, you will notice a lot of events are filled in for the various controls - but also that a couple are not:

You can select something, but it's not applicable or already assigned to something else. The missing events are:

- Touchpad Press

- Touchpad Position

- Touchpad Touch

- Trigger Touch

- Thumbstick Press

So we have to add these events. To achieve this, we have to do one final clone: the Default Input Actions Profile.

And then you can simply add the five missing events (or input actions, as they are called in MRTK2 lingo).

Be sure to select "Digital" for all new actions except for Touchpad Position. Make that one a "Dual Axis". The reason for that will be explained later.

Now you can once again go back to the Input/Controller/Input Mappings settings and assign the proper (new) events to the controller buttons. Don't forget to do this for both the right and the left hand controller.

And now, finally, there are events attached to all the buttons of the controllers. It's time to show how to trap them.

Understanding and using the events

The important thing to understand is that there are different kinds of events, which all need to be trapped in a specific way. When I showed you how to add the event types, all but one of them were digital types. Only one was "Dual Axis". There actually are a lot of different types of events:

I am not sure if I got all the details right, but this is what I found out:

- a digital event, that's basically a click. You need to have a behaviour that implements IMixedRealityInputHandler to intercept this. Example: a click on the menu button

- a single axis event is an event that gives you a single value. The only application for WMR controllers I have found is a way to determine how far the trigger is pushed inwards (on a scale of 0-1). You will need to implement IMixedRealityInputHandler<float>

- a dual axis event gives you two values. The only application I found was the touchpad - it gives you the X,Y coordinates where the touchpad was touched. Range for both is -1 to 1. 0,0 is the touchpad's center. You will need to implement IMixedRealityInputHandler<Vector2>

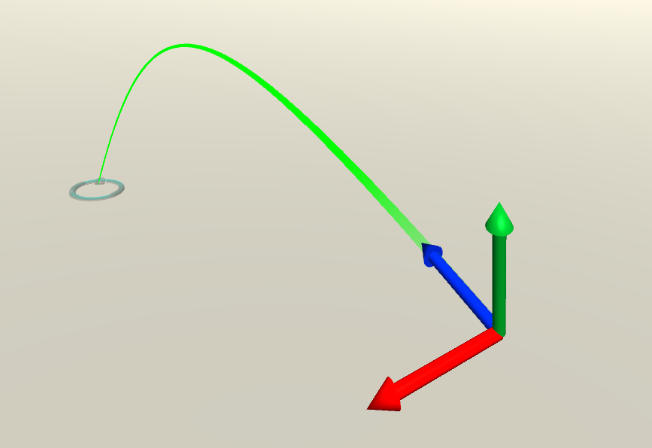

- a six dof (degrees of freedom) event will give you a MixedRealityPose. This enables you to determine the current grip and pointer pose of the controller. You will need to implement IMixedRealityInputHandler<MixedRealityPose>

Demo application

I created an application that demonstrates the events you will get and what type they are. If available, it will also display the values associated with the event. It looks like this:

Not very spectacular, I'll admit, but it does the trick. The top row displays the type of event intercepted; the bottom two rows show the actual events with - in four cases - the information associated with the event. When an event fires, its red circle turns green.

Observations using the demo app

- You will notice you'll get a constant stream of Grip Pose and Pointer Pose events - hence these two events and the MixedRealityPose type events indicator are always green

- You will also get a constant stream of "Teleport Direction" events (of type Vector2) from the thumbstick, even if you don't touch it. I have no idea why this is so. I had to filter those out, or else the fact that Touchpad Position is a Vector2 event got hidden in the noise.

- Grip press is supposed to be a SingleAxis event, but only fires Digital events

- If you touch the touchpad, it actually fires two events simultaneously - the Digital Touchpad Touch and the Vector2 Touchpad position.

- Consequently, if you press the touchpad, you get three events - Touchpad touch, Touchpad Position and Touchpad Press.

- The trigger button is also an interesting story, as it fires three events as well. As soon as you start to press it ever so slightly, it fires the SingleAxis event "Trigger" that tells you how far the trigger is depressed. But at the lowest value where "Trigger" registers, it will also fire the Digital "Trigger Touch" event. However, you will usually get a lot more "Trigger" events, as it's very hard to keep the trigger perfectly still while it's halfway depressed.

- And finally, when you fully press it, the Digital "Select" event will be fired.

- Menu and Thumbstick press are simple Digital events as you would expect.
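Filtering out a noisy action like the thumbstick one can be as simple as checking the action's description against a small ignore list. The sketch below is not the demo project's code: `NoisyActionFilter` and `ShouldShow` are hypothetical names, and it assumes the thumbstick action carries the description "Teleport Direction", as observed above.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper (not part of the demo project): decides whether an
// event should be displayed, based on the description of its input action.
public static class NoisyActionFilter
{
    // Descriptions of actions that fire constantly and would drown out the
    // rest. Compared case-insensitively, so no ToLower() is needed.
    private static readonly HashSet<string> Ignored =
        new HashSet<string>(StringComparer.OrdinalIgnoreCase)
        {
            "Teleport Direction"
        };

    // Returns false for actions on the ignore list, true for everything else.
    public static bool ShouldShow(string actionDescription)
    {
        return !Ignored.Contains(actionDescription);
    }
}
```

Calling `NoisyActionFilter.ShouldShow(eventData.MixedRealityInputAction.Description)` at the top of the Vector2 handler would be one place to use it.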

Key things to learn from the demo app

Registering global handlers

At the top you will see the ControllerInputHandler, derived from BaseInputHandler and implementing the four interfaces mentioned.

    public class ControllerInputHandler : BaseInputHandler,
        IMixedRealityInputHandler, IMixedRealityInputHandler<Vector2>,
        IMixedRealityInputHandler<float>,
        IMixedRealityInputHandler<MixedRealityPose>

The important thing to realize is that this behaviour needs to handle global events. This implies two things. First, you will have to register global handlers:

    protected override void RegisterHandlers()
    {
        CoreServices.InputSystem?.RegisterHandler<IMixedRealityInputHandler>(this);
        CoreServices.InputSystem?.RegisterHandler<IMixedRealityInputHandler<Vector2>>(this);
        CoreServices.InputSystem?.RegisterHandler<IMixedRealityInputHandler<float>>(this);
        CoreServices.InputSystem?.RegisterHandler<IMixedRealityInputHandler<MixedRealityPose>>(this);
    }

(and of course unregister them in UnregisterHandlers).

Second, if you use this in Unity, uncheck the "Is Focus Required" checkbox.

This ensures the global handlers are registered properly and the events are intercepted by this behaviour.
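Put together, a minimal skeleton of such a handler could look like the sketch below. Only the class declaration and RegisterHandlers are taken from the demo project; the class name `ControllerInputHandlerSketch`, the body of UnregisterHandlers and the logging in the handler methods are assumptions for illustration, written against the MRTK 2.x interfaces. It needs Unity with the MRTK2 imported to compile.

```csharp
using Microsoft.MixedReality.Toolkit;
using Microsoft.MixedReality.Toolkit.Input;
using Microsoft.MixedReality.Toolkit.Utilities;
using UnityEngine;

// Hypothetical skeleton, not the demo project's actual class.
public class ControllerInputHandlerSketch : BaseInputHandler,
    IMixedRealityInputHandler,
    IMixedRealityInputHandler<Vector2>,
    IMixedRealityInputHandler<float>,
    IMixedRealityInputHandler<MixedRealityPose>
{
    protected override void RegisterHandlers()
    {
        CoreServices.InputSystem?.RegisterHandler<IMixedRealityInputHandler>(this);
        CoreServices.InputSystem?.RegisterHandler<IMixedRealityInputHandler<Vector2>>(this);
        CoreServices.InputSystem?.RegisterHandler<IMixedRealityInputHandler<float>>(this);
        CoreServices.InputSystem?.RegisterHandler<IMixedRealityInputHandler<MixedRealityPose>>(this);
    }

    // Assumed mirror image of RegisterHandlers: one call per interface.
    protected override void UnregisterHandlers()
    {
        CoreServices.InputSystem?.UnregisterHandler<IMixedRealityInputHandler>(this);
        CoreServices.InputSystem?.UnregisterHandler<IMixedRealityInputHandler<Vector2>>(this);
        CoreServices.InputSystem?.UnregisterHandler<IMixedRealityInputHandler<float>>(this);
        CoreServices.InputSystem?.UnregisterHandler<IMixedRealityInputHandler<MixedRealityPose>>(this);
    }

    // Digital events (IMixedRealityInputHandler)
    public void OnInputDown(InputEventData eventData)
    {
        Debug.Log($"Digital down: {eventData.MixedRealityInputAction.Description}");
    }

    public void OnInputUp(InputEventData eventData)
    {
        Debug.Log($"Digital up: {eventData.MixedRealityInputAction.Description}");
    }

    // Dual axis events, for instance the touchpad position
    public void OnInputChanged(InputEventData<Vector2> eventData)
    {
        Debug.Log($"{eventData.MixedRealityInputAction.Description}: {eventData.InputData}");
    }

    // Single axis events, for instance how far the trigger is pressed
    public void OnInputChanged(InputEventData<float> eventData)
    {
        Debug.Log($"{eventData.MixedRealityInputAction.Description}: {eventData.InputData}");
    }

    // Six dof events; these arrive constantly, so no logging here
    public void OnInputChanged(InputEventData<MixedRealityPose> eventData)
    {
        var pose = eventData.InputData; // pose.Position and pose.Rotation
    }
}
```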

Discriminating between events of same type

It might not be immediately clear, but the only way I have been able to determine exactly which event I get is to check its MixedRealityInputAction.Description property. In the code you will see things like

    var eventName = eventData.MixedRealityInputAction.Description.ToLower();
    if (eventName == "touchpad position")

In fact, you will see that the names of the event displayers in the Scene hierarchy are basically the names of the events without spaces. I simply find them by name.

First I load them into a dictionary in Start by looking for children of the "Events" object:

    foreach (var controller in _eventDisplayParent.GetComponentsInChildren<SingleShotController>())
    {
        _eventDisplayers.Add(controller.gameObject.name.ToLower(), controller);
    }

Then I simply find them back by looking in that dictionary and activating the SingleShotController. This class is part of a prefab that I used and explained in an earlier post.

    private void ShowEvent(string eventName)
    {
        var controller = GetControllerForEvent(eventName);
        if (controller != null)
        {
            controller.ShowActivated();
        }
    }

    private SingleShotController GetControllerForEvent(string controllerEvent)
    {
        // TryGetValue yields null for an unknown event instead of throwing,
        // so the null check in ShowEvent actually does its job
        _eventDisplayers.TryGetValue(controllerEvent.ToLower().Replace(" ", ""), out var controller);
        return controller;
    }

I must say I feel a bit awkward about having to use strings to determine events by name. I guess it's inevitable if you want to be able to support multiple platforms and be able to add and modify events without actually having to change code and introduce types. This flexibility is what the MRTK2 intends to support, but it still feels weird.
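One way to soften the string dependency a little is to keep the action names in a single place. The sketch below is only an illustration, not something from the demo project: `ControllerActions`, `Is` and `ToDisplayerKey` are hypothetical names, and the constants assume the descriptions are spelled exactly like the five input actions added to the profile earlier.

```csharp
using System;

// Hypothetical helper (not part of the demo project): one place for the
// action descriptions, so the string literals are not scattered over the code.
public static class ControllerActions
{
    // Assumed to match the descriptions entered in the Input Actions profile.
    public const string TouchpadPress = "Touchpad Press";
    public const string TouchpadPosition = "Touchpad Position";
    public const string TouchpadTouch = "Touchpad Touch";
    public const string TriggerTouch = "Trigger Touch";
    public const string ThumbstickPress = "Thumbstick Press";

    // Case-insensitive comparison, so callers don't need ToLower().
    public static bool Is(string description, string action)
    {
        return string.Equals(description, action, StringComparison.OrdinalIgnoreCase);
    }

    // Mirrors the key convention used for the event displayers:
    // lower case, spaces removed.
    public static string ToDisplayerKey(string description)
    {
        return description.ToLowerInvariant().Replace(" ", "");
    }
}
```

A check in a handler would then read `ControllerActions.Is(eventData.MixedRealityInputAction.Description, ControllerActions.TouchpadPosition)`. The strings still have to match the profile, but a mistyped constant name at least fails at compile time.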

Combining events

In the immersive headset version of Walk the World you can zoom in or out by pressing the top or the bottom of the touchpad. But as we have seen, it's not possible to detect where the user has pressed, only that he has pressed. We can, however, detect where he last touched, which most likely is at or very near where he pressed. How you can combine the touch and press events to achieve an effect like the one I just described is shown in the relevant pieces of the demo project code copied below:

    Vector2 _lastpressPosition;

    public void OnInputChanged(InputEventData<Vector2> eventData)
    {
        var eventName = eventData.MixedRealityInputAction.Description.ToLower();
        if (eventName == "touchpad position")
        {
            _lastpressPosition = eventData.InputData;
        }
    }

    public void OnInputDown(InputEventData eventData)
    {
        var eventName = eventData.MixedRealityInputAction.Description.ToLower();
        if (eventName == "touchpad press")
        {
            // Limit event capture to only when more or less the top or bottom
            // of the touch pad is pressed
            if (_lastpressPosition.y < -0.7 || _lastpressPosition.y > 0.7)
            {
                ShowEvent(eventName);
            }
        }
    }

First, the touchpad position event handler stores the last position in a member variable. Then, when the touchpad is pressed, we check where it was last touched. The event is only shown when the front 30% or back 30% of the touchpad (y beyond 0.7 or -0.7) was last touched before it was pressed. If you press the sides (or actually, touch the side before you press) nothing happens.
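To turn that check into an actual zoom in or zoom out decision, the last touched y value can be mapped to a zone. This is a minimal sketch and not the Walk the World code: `TouchpadZone` and `TouchpadZones.Classify` are hypothetical names, the 0.7 threshold is the one from the snippet above, and the assumption that positive y is the top of the touchpad is mine.

```csharp
// Hypothetical types (not part of the demo project).
public enum TouchpadZone
{
    None,
    Top,
    Bottom
}

public static class TouchpadZones
{
    // Maps the last touched y coordinate (range -1 to 1, 0 is the center)
    // to a zone. Assumes positive y is the top of the touchpad.
    public static TouchpadZone Classify(float lastTouchY, float threshold = 0.7f)
    {
        if (lastTouchY > threshold)
        {
            return TouchpadZone.Top;
        }

        if (lastTouchY < -threshold)
        {
            return TouchpadZone.Bottom;
        }

        // Center or sides: ignore the press
        return TouchpadZone.None;
    }
}
```

In the press handler, `TouchpadZones.Classify(_lastpressPosition.y)` would then tell whether to zoom in, zoom out or do nothing.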

Conclusion

Interacting with the controller has changed quite a bit since ye olde days of the HoloToolkit, but it still is pretty much doable and usable if you follow the rules and patterns above. I still find it odd I have to determine what event is fired by checking its description, but I may just be missing something. However, this method works for me, at least in my application.

Also, I am a bit puzzled by the almost-the-same-but-not-quite-so events around trigger and touchpad. No doubt some serious considerations have been made implementing it like this, but not having been around while that happened, it leaves me confused about the why.

Finally, bear in mind you usually don't have to trap Select manually, and neither is the Thumbstick ('Teleport Direction') usually very interesting, as those events are handled by the environment by default - the only reason I showed them here was to demonstrate you could actually intercept them.

Demo project, as always, here on GitHub.
