Intro

HoloLens is cool, Machine Learning is cool, so what's more fun than combining these two great techniques? Very recently you could read "Back to the future now: Execute your Azure trained Machine Learning models on HoloLens!" on the AppConsult blog, and as early as last May my good friend Matteo Pagani wrote on the same blog about his very first experiments with WindowsML - as the technology to run machine learning models on your Windows ('edge') devices is called. Both blog posts use an Image Classification algorithm, which basically tells you whether or not an object is in the image, and what the confidence level of this recognition is.

And then this happened:

Now things are getting interesting. I wondered if I could use this technique to detect objects in the picture and then use HoloLens' depth camera to actually guesstimate where those objects were in 3D space.

The short answer: yes. It works surprisingly well.

The global idea

- User air taps to initiate the process

- The HoloLens takes a quick picture and uploads the picture to the Custom Vision API

- HoloLens gets the recognized areas back

- Calculates the center of each area with a confidence level of at least 0.7

- 'Projects' these centers on a plane 1 m wide and 0.56 m high that's 1 meter in front of the Camera (i.e. the user's viewpoint)

- 'Shoots' rays from the Camera through the projected center points and checks if and where they strike the Spatial Map

- Places labels on the detected points (if any).

Part 1: creating and training the model

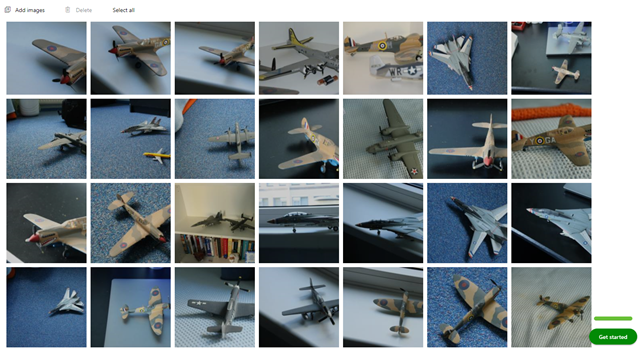

Matteo already wrote about how simple it actually is to create an empty model in CustomVision.ai, so I will skip that part. Inspired by his article I wanted to recognize airplanes as well, but I opted for model airplanes - much easier to test with than actual airplanes. So I dusted off all the plastic airplane models I had built during my late teens - this was a thing shy adolescent geeks like me sometimes did, back in the Jurassic when I grew up ;) - it helped that we did not have to spend 4 hours per day on social media ;). But I digress. I took a bunch of pictures of them:

And then, picture by picture, I had to mark and label the areas that contain the desired objects. This is what is different from training a model for 'mere' image classification: you have to mark every occurrence of your desired object.

This is very easy to do. It's a bit boring and repetitive, but learning stuff takes sacrifices, and in the end I had quite an ok model. You train it in just the same way as Matteo already wrote about - by hitting the big green 'Train' button that's kind of hard to miss on the top right.

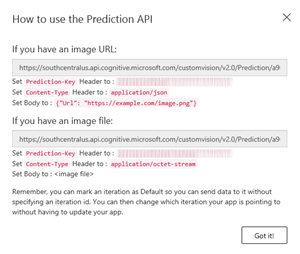

When you are done, you will need two things:

- The Prediction URL

- The Prediction key.

You can get those by clicking the "Performance" tab on top:

Then click the "Prediction URL" tab:

This will make a popup appear with the necessary information:

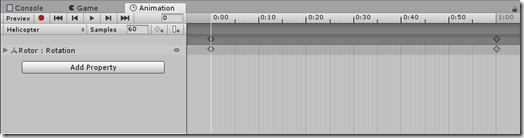

Part 2: Building the HoloLens app to use the model

Overview

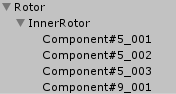

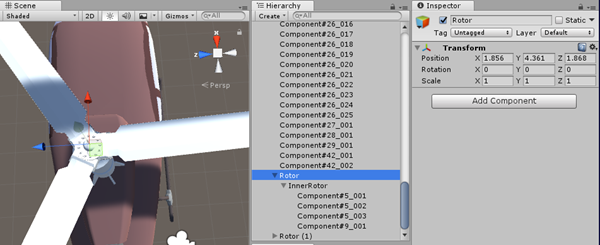

The app is basically using three main components:

- CameraCapture

- ObjectRecognizer

- ObjectLabeler

They sit in the Managers object and communicate using the Messenger that I wrote about earlier.

Part 2a: CameraCapture gets a picture - when you air tap

So what you basically see is that CameraCapture takes a picture in a format based upon whether or not the DebugPane is present:

    pixelFormat = _debugPane != null ? CapturePixelFormat.BGRA32 : CapturePixelFormat.JPEG

and then either directly copies the captured (JPEG) photo into the photoBuffer, or shows it on the DebugPane as BGRA32 and converts it to JPEG from there:

    void OnCapturedPhotoToMemory(PhotoCapture.PhotoCaptureResult result,
        PhotoCaptureFrame photoCaptureFrame)
    {
        var photoBuffer = new List<byte>();
        if (photoCaptureFrame.pixelFormat == CapturePixelFormat.JPEG)
        {
            photoCaptureFrame.CopyRawImageDataIntoBuffer(photoBuffer);
        }
        else
        {
            photoBuffer = ConvertAndShowOnDebugPane(photoCaptureFrame);
        }

        Messenger.Instance.Broadcast(
            new PhotoCaptureMessage(photoBuffer, _cameraResolution, CopyCameraTransForm()));

        // Deactivate our camera
        _photoCaptureObject.StopPhotoModeAsync(OnStoppedPhotoMode);
    }

The display and conversion is done this way:

    private List<byte> ConvertAndShowOnDebugPane(PhotoCaptureFrame photoCaptureFrame)
    {
        var targetTexture = new Texture2D(_cameraResolution.width,
            _cameraResolution.height);
        photoCaptureFrame.UploadImageDataToTexture(targetTexture);
        Destroy(_debugPane.GetComponent<Renderer>().material.mainTexture);

        _debugPane.GetComponent<Renderer>().material.mainTexture = targetTexture;
        _debugPane.transform.parent.gameObject.SetActive(true);
        return new List<byte>(targetTexture.EncodeToJPG());
    }

It creates a texture, uploads the buffer into it, destroys the current texture and sets the new texture. Then the debug pane's parent game object is activated so the picture is actually displayed, and finally the texture is used to convert the image to JPEG.

Either way, the result is a JPEG, and the buffer contents are sent in a message, together with the camera resolution and a copy of the Camera's transform. We need the resolution to calculate the height/width ratio of the picture, and we need to retain the transform because the user may have moved between the moment the picture is taken and the moment the result comes back. You can't just send the Camera's transform itself, because it keeps changing as the user moves. So you have to send a 'copy', which is made by this rather crude method, using a temporary empty game object:

    private Transform CopyCameraTransForm()
    {
        var g = new GameObject();
        g.transform.position = CameraCache.Main.transform.position;
        g.transform.rotation = CameraCache.Main.transform.rotation;
        g.transform.localScale = CameraCache.Main.transform.localScale;
        return g.transform;
    }

Part 2b: ObjectRecognizer sends it to CustomVision.ai and reads results

The ObjectRecognizer is, apart from some song and dance to pick the message apart and start a Coroutine, a fairly simple matter. This part does all the work:

    private IEnumerator RecognizeObjectsInternal(IEnumerable<byte> image,
        Resolution cameraResolution, Transform cameraTransform)
    {
        var request = UnityWebRequest.Post(_liveDataUrl, string.Empty);
        request.SetRequestHeader("Prediction-Key", _predictionKey);
        request.SetRequestHeader("Content-Type", "application/octet-stream");
        request.uploadHandler = new UploadHandlerRaw(image.ToArray());
        yield return request.SendWebRequest();
        var text = request.downloadHandler.text;
        var result = JsonConvert.DeserializeObject<CustomVisionResult>(text);
        if (result != null)
        {
            result.Predictions.RemoveAll(p => p.Probability < 0.7);
            Debug.Log("#Predictions = " + result.Predictions.Count);
            Messenger.Instance.Broadcast(
                new ObjectRecognitionResultMessage(result.Predictions,
                    cameraResolution, cameraTransform));
        }
        else
        {
            Debug.Log("Predictions is null");
        }
    }

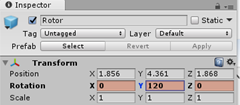

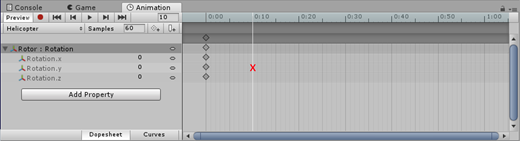

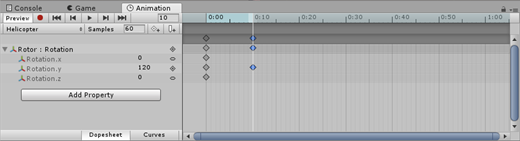

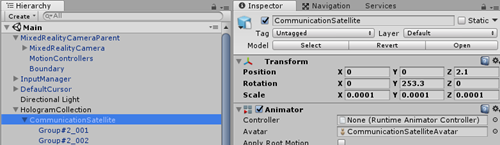

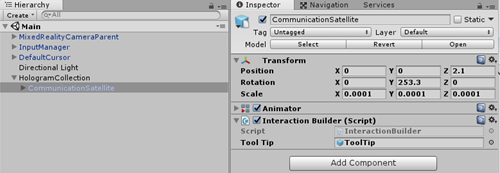

You will need to set the _liveDataUrl and _predictionKey values via the editor, as you could see in the image just below the Part 2a header. This behaviour creates a web request to the prediction URL, and adds the prediction key and the right content type as headers. The body content is set to the binary image data using an UploadHandlerRaw, and then the request is sent to CustomVision.ai. The result is deserialized into a CustomVisionResult object, all predictions with a probability lower than the 0.7 threshold are removed, and the remaining predictions are put into a message to be sent to the ObjectLabeler, together once again with the camera's resolution and transform.

A little note: the CustomVisionResult, together with all the classes it uses, is in the CustomVisionResult.cs file in the demo project. This code was generated by first executing the SendWebRequest and then copying the raw output of "request.downloadHandler.text" into QuickType. It's an ideal site to quickly make classes for JSON serialization.
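To give an idea of what such generated classes and the threshold filter boil down to, here is a minimal sketch that runs outside Unity. Everything in it is an assumption for illustration: the class shapes are hand-written guesses based on the property names used in this post (Predictions, Probability, BoundingBox.Left and so on), not the actual contents of CustomVisionResult.cs; the sample JSON is made up; and it uses System.Text.Json instead of the JsonConvert call from the code above, only to avoid an external dependency.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical, hand-written stand-ins for the generated classes.
public class BoundingBox
{
    public double Left { get; set; }
    public double Top { get; set; }
    public double Width { get; set; }
    public double Height { get; set; }
}

public class Prediction
{
    public double Probability { get; set; }
    public string TagName { get; set; }
    public BoundingBox BoundingBox { get; set; }
}

public class CustomVisionResult
{
    public List<Prediction> Predictions { get; set; }
}

public static class PredictionFilter
{
    // Made-up sample response: one confident hit and one weak one.
    public const string SampleJson =
        "{\"predictions\":[" +
        "{\"probability\":0.91,\"tagName\":\"airplane\"," +
        "\"boundingBox\":{\"left\":0.1,\"top\":0.2,\"width\":0.3,\"height\":0.4}}," +
        "{\"probability\":0.35,\"tagName\":\"airplane\"," +
        "\"boundingBox\":{\"left\":0.5,\"top\":0.5,\"width\":0.2,\"height\":0.2}}]}";

    // Deserializes the response and drops everything below the threshold.
    public static List<Prediction> Parse(string json, double threshold)
    {
        // The JSON uses camelCase names, the classes PascalCase.
        var options = new JsonSerializerOptions { PropertyNameCaseInsensitive = true };
        var result = JsonSerializer.Deserialize<CustomVisionResult>(json, options);
        var predictions = result?.Predictions ?? new List<Prediction>();
        predictions.RemoveAll(p => p.Probability < threshold);
        return predictions;
    }
}
```

Calling PredictionFilter.Parse(PredictionFilter.SampleJson, 0.7) keeps only the first of the two sample predictions, which is the same filtering the RemoveAll call in the ObjectRecognizer performs.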

Interesting to note here is that Custom Vision returns bounding boxes by giving top, left, width and height - in values that are always between 0 and 1. So if the top/left of a bounding box sits at (0,0), it's all the way at the top left of the picture, and (1,1) is at the bottom right of the picture, regardless of the height/width ratio of your picture. So if your picture is not square (and most cameras don't create square pictures) you need to know the actual width and height of your picture - that way, you can calculate what pixel coordinates actually correspond to the numbers Custom Vision returns. And that's exactly what the next step does.
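As a small illustration of that arithmetic, the sketch below converts a normalized bounding box to pixel coordinates. The helper name BoundingBoxMath.ToPixels is hypothetical and not part of the demo project; the only thing taken from this post is the 2048x1152 picture size.

```csharp
using System;

public static class BoundingBoxMath
{
    // Converts a normalized box (all values between 0 and 1) to pixel
    // coordinates. Horizontal values scale with the image width,
    // vertical values with the image height.
    public static (int Left, int Top, int Width, int Height) ToPixels(
        double left, double top, double width, double height,
        int imageWidth, int imageHeight)
    {
        return ((int)Math.Round(left * imageWidth),
                (int)Math.Round(top * imageHeight),
                (int)Math.Round(width * imageWidth),
                (int)Math.Round(height * imageHeight));
    }
}
```

For example, a box with left 0.25, top 0.5, width 0.5 and height 0.25 in a 2048x1152 picture comes out as left 512, top 576, width 1024 and height 288 pixels. The same normalized width and height numbers therefore describe a box that is much wider than it is high in pixels, which is why the aspect ratio matters.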

Part 2c: ObjectLabeler shoots for the Spatial Map and places labels

The ObjectLabeler contains very little code as well, although the calculations may need a bit of explanation. The central piece of code is this method:

public virtual void LabelObjects(IList<Prediction> predictions,

Resolution cameraResolution, Transform cameraTransform)

{

ClearLabels();

var heightFactor = cameraResolution.height / cameraResolution.width;

var topCorner = cameraTransform.position + cameraTransform.forward -

cameraTransform.right / 2f +

cameraTransform.up * heightFactor / 2f;

foreach (var prediction in predictions)

{

var center = prediction.GetCenter();

var recognizedPos = topCorner + cameraTransform.right * center.x -

cameraTransform.up * center.y * heightFactor;

var labelPos = DoRaycastOnSpatialMap(cameraTransform, recognizedPos);

if (labelPos != null)

{

_createdObjects.Add(CreateLabel(_labelText, labelPos.Value));

}

}

if (_debugObject != null)

{

_debugObject.SetActive(false);

}

Destroy(cameraTransform.gameObject);

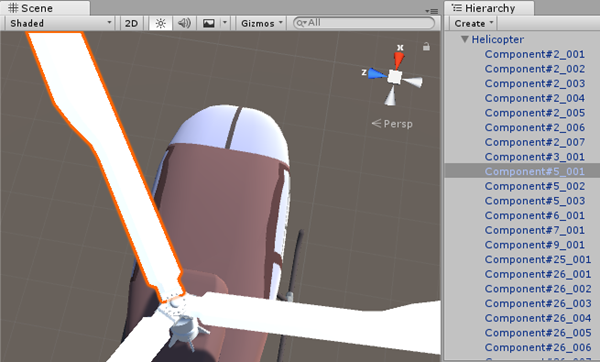

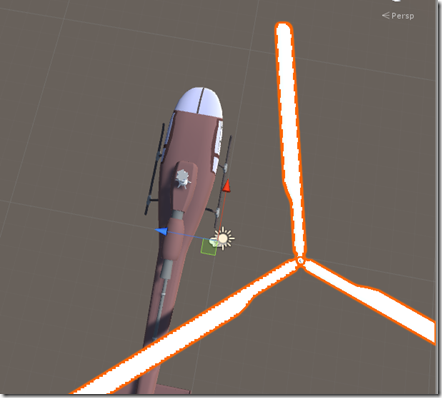

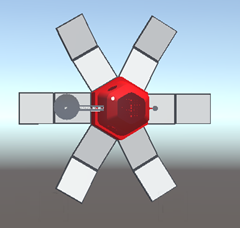

}First, we clear any labels that might have been created in a previous run. Then we calculate the height/width ratio of the picture (this is 2048x1152, so heightFactor will always be 0.5625, but why hard code something that can be calculated). Then comes the first interesting part. Remember that I wrote we are projecting the picture on a plane 1 meter before the user. We do this because the picture then looks pretty much live sized. So we need to go forward 1 meter from the camera position:

cameraTransform.position + cameraTransform.forward.normalized

But then we end up in the center of the plane. We need to get to the top left corner as a starting point. So we go half a meter to the left (actually, -1 * right, which amounts to left), then half the height factor up:

    cameraTransform.up * heightFactor / 2f

In a picture, it looks like this:

Once we are there, we calculate the center of the prediction using a very simple extension method:

    public static Vector2 GetCenter(this Prediction p)
    {
        return new Vector2((float) (p.BoundingBox.Left + (0.5 * p.BoundingBox.Width)),
            (float) (p.BoundingBox.Top + (0.5 * p.BoundingBox.Height)));
    }

To find the actual location on the image, we basically use the same trick again in reverse: first we move to the right from the top corner by the center's x value:

    var recognizedPos = topCorner + cameraTransform.right * center.x

And then a bit down again (actually, -up) using the y value scaled for height:

    -cameraTransform.up * center.y * heightFactor;
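To check these two steps by hand, here is a minimal sketch of the same arithmetic outside Unity. It uses System.Numerics vectors instead of Unity's Transform, and the class and method names (PlaneProjection.ToWorld) are hypothetical; only the formulas are taken from the LabelObjects method above.

```csharp
using System;
using System.Numerics;

public static class PlaneProjection
{
    // Maps a normalized image point (0..1, origin at the top left) onto a
    // plane that is 1 m wide and heightFactor m high, 1 m in front of the
    // camera. forward, right and up are assumed to be unit vectors.
    public static Vector3 ToWorld(Vector3 position, Vector3 forward,
        Vector3 right, Vector3 up, Vector2 center, float heightFactor)
    {
        // Top left corner of the plane: 1 m forward, half a meter left,
        // half the plane height up.
        var topCorner = position + forward - right / 2f + up * heightFactor / 2f;

        // From there: right by x, down by y scaled to the plane height.
        return topCorner + right * center.X - up * center.Y * heightFactor;
    }
}
```

With the camera at the origin looking along +Z (forward = (0,0,1), right = (1,0,0), up = (0,1,0)) and heightFactor = 1152f / 2048f = 0.5625, the image center (0.5, 0.5) lands at (0, 0, 1), exactly 1 meter straight ahead, and the image's top left (0, 0) lands at (-0.5, 0.28125, 1). Note the float literals in the division: with two ints, 1152 / 2048 evaluates to 0.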

Then we simply do a ray cast to the spatial map from the camera position through the location we calculated, basically shooting 'through' the picture for the real object.

private Vector3? DoRaycastOnSpatialMap(Transform cameraTransform,

Vector3 recognitionCenterPos)

{

RaycastHit hitInfo;

if (SpatialMappingManager.Instance != null &&

Physics.Raycast(cameraTransform.position,

(recognitionCenterPos - cameraTransform.position),

out hitInfo, 10, SpatialMappingManager.Instance.LayerMask))

{

return hitInfo.point;

}

return null;

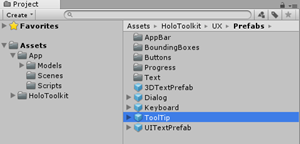

}and create the label at the right spot. I copied the code for creating the label from two posts ago, so I will skip repeating that here.

There is a little bit I want to repeat here:

    if (_debugObject != null)
    {
        _debugObject.SetActive(false);
    }

    Destroy(cameraTransform.gameObject);

If the debug object is set (that is to say, the plane showing the photo HoloLens takes to upload) it will be turned off here, otherwise it obscures the actual labels. But more important is the last line: I created the copy of the camera's transform using a temporary game object. As the user keeps on shooting pictures, those will add up and clutter the scene. So after the work is done, I clean it up.

And the result...

The annoying thing is, as always, I can't show you a video of the whole process, as any video recording stops as soon as the app takes a picture. So the only thing I can show you is this kind of doctored video - I restarted video immediately after taking the picture, but I miss the part where the actual picture is floating in front of the user. This is what it looks like, though, if you disable the debug pane from the Camera Capture script:

Lessons learned

- There is a reason why Microsoft says you need at least 50 pictures for somewhat reliable recognition. I took about 35 pictures of about 10 different models of airplanes. I think I should have taken more like 500 pictures (50 of every type of model airplane) and then things would have gone a lot better. Nevertheless, it already works pretty well.

- If the camera you use is pretty so-so (exhibit A: the HoloLens built-in video camera) it does not exactly help if your training pictures are made with a high end DSLR, which shoots in great detail, handles adverse lighting conditions superbly, and never, ever produces a blurry picture.

Conclusion

Three simple objects to call a remote Custom Vision Object Recognition Machine Learning model and translate its result into a 3D label. Basically a Vuforia-like application, but using 'artificial intelligence'. I love the way Microsoft is taking the very thing they really excel in - democratizing and commoditizing complex technologies into usable tools - to the Machine Learning space.

The app I made is quite primitive, and it also has a noticeable 'thinking moment' - since the model lives in the cloud and has to be accessed via an HTTP call. This is because the model is not a 'compact' model, therefore it's not downloadable and it can't run on WindowsML. We will see what the future has in store for these kinds of models. But the app shows what's possible with these kinds of technologies, and it makes the prospect of a next version of HoloLens having an AI coprocessor all the more exciting!

Demo project - without the model, unfortunately - can be downloaded here.