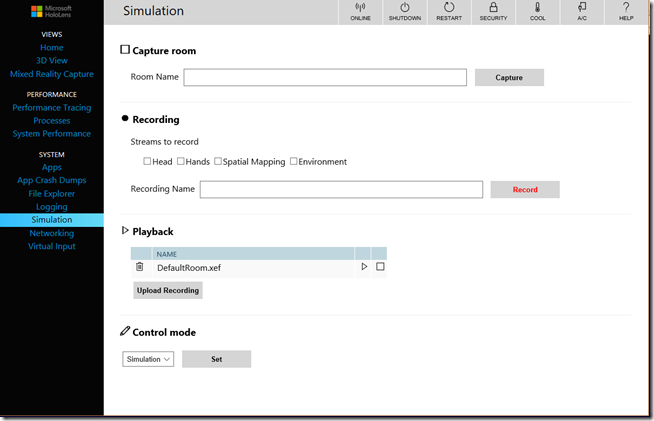

HoloLens can interact with reality – that’s why it’s a mixed reality device, after all. The problem is that the reality you need is not always available. For example, the app may need to run in a room or facility at a (client) location you have only limited access to, and you have to place things at positions relative to features of that room. Of course, you can use the simulation in the device portal to capture the room.

You can save the room into an XEF file and upload that to (another) HoloLens. That works fine at runtime, but in Unity it doesn’t help you much with getting a feel for the space. Moreover, it messes with your HoloLens’ spatial mapping, so I don’t like to use it on a real, live HoloLens.

There is another option, though, in the 3D view tab.

If you click “Update”, you will see a rendering of everything the HoloLens has recognized in the ‘space’ it currently finds itself in: basically, the whole mesh. In this case, that is the ground and first floor of my house (I never felt like taking the HoloLens to the 2nd floor). If you click “Save”, it will offer to save a SpatialMapping.obj file, in the simple Wavefront Object format. And that is something you actually can use in Unity.
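To get a feel for how heavy such an export is (the file I got was over 40 MB), you can simply count its records. OBJ is plain text: each `v` line is a vertex and each `f` line is a face listing vertex indices, optionally written as `index/texture/normal`. The minimal Python sketch below assumes the exported SpatialMapping.obj uses those standard records; the `obj_stats` helper is a hypothetical name, not part of the device portal or Unity.

```python
def obj_stats(lines):
    """Count vertices, faces and triangles in Wavefront OBJ text.

    Only 'v' (vertex) and 'f' (face) records are counted; 'vn', 'vt',
    comments and material lines are ignored. A face with n corners
    counts as n - 2 triangles (fan triangulation).
    """
    vertices = faces = triangles = 0
    for line in lines:
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        if parts[0] == "v":
            vertices += 1
        elif parts[0] == "f":
            faces += 1
            # parts[0] is the 'f' keyword; the rest are corner references
            triangles += max(len(parts) - 1 - 2, 0)
    return vertices, faces, triangles
```

Usage: `with open("SpatialMapping.obj") as fh: print(obj_stats(fh))`. Running it before and after simplification shows how much the mesh shrank.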

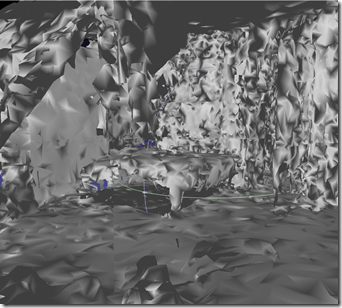

Only, it looks rather crappy, even if you know what you are looking at. This is the side of my house, with the living room at the bottom left (the rectangular thing is the large cupboard), the master bedroom* with the slanted roof on top of that, and, if you look carefully, the stairs coming up from the hallway a little right of center, at the bottom of the house.

What is also pretty confusing is the fact that meshes can have only one side. This has the peculiar effect that in a lot of places you can look into the house from outside, but not out of the house from within. Anyway, this mesh is way too complex (the file is over 40 MB) and messy.
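The one-sidedness comes from back-face culling: a triangle's front is defined by the order of its corners (its winding), and renderers usually skip triangles whose front faces away from the camera. Presumably the spatial mapping triangles face the inside of the rooms, where the HoloLens was while scanning, so from outside you see their culled backs and look straight through the walls. A minimal Python sketch of the test, assuming counter-clockwise winding marks the front (engines differ on this convention):

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def is_front_facing(v0, v1, v2, eye):
    """True if triangle (v0, v1, v2) shows its front to a camera at eye.

    The face normal follows from the winding order via the cross product;
    with back-face culling, only triangles for which this is True are drawn.
    """
    normal = cross(sub(v1, v0), sub(v2, v0))
    return dot(normal, sub(eye, v0)) > 0
```

Swapping two corners reverses the winding, which flips the normal and therefore which side of the wall is visible.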

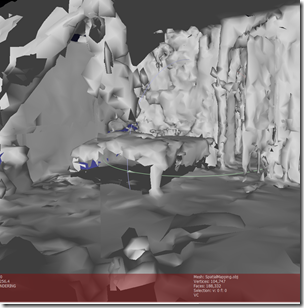

MeshLab has quite a few tools to make your mesh a bit smoother. A raw piece of mesh, for instance the master bedroom, usually looks kind of spiky – see left. But then choose Filters/Remeshing, Simplification and Reconstruction/Simplification: Quadric Edge Collapse Decimation.

After that, my house starts to look a lot less like the lair of the Shrike; it’s more like an undiscovered Antoni Gaudí building now. Next, hunt down the material used (in the materials subfolder), set it to transparent and play with color and transparency. I thought this somewhat transparent brown worked pretty well. Although there’s definitely still a lot of ‘noise’, it now clearly looks like my house, good enough for me to know where things are – or ought to be.
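The filter's name refers to Garland and Heckbert's quadric error metric. Each triangle plane p = (a, b, c, d), with ax + by + cz + d = 0 and a unit normal, contributes a 4x4 matrix K = p p^T; summing these per vertex gives a quadric Q, and v^T Q v is the sum of squared distances from a point to those planes. Edges whose collapse causes the least error go first, so flat areas are thinned out while the overall shape survives. The sketch below only illustrates the metric; evaluating the collapse at the edge midpoint is a simplification chosen for this sketch, not how MeshLab picks the new vertex position.

```python
def plane_quadric(a, b, c, d):
    """4x4 quadric K = p p^T for the plane ax + by + cz + d = 0.

    (a, b, c) must be a unit normal; then v^T K v is the squared
    distance from point v to the plane.
    """
    p = (a, b, c, d)
    return [[p[i] * p[j] for j in range(4)] for i in range(4)]


def add_quadrics(q1, q2):
    """Quadrics of several planes combine by simple addition."""
    return [[q1[i][j] + q2[i][j] for j in range(4)] for i in range(4)]


def quadric_error(q, point):
    """v^T Q v for the homogeneous point v = (x, y, z, 1)."""
    v = (*point, 1.0)
    return sum(v[i] * q[i][j] * v[j] for i in range(4) for j in range(4))


def midpoint_collapse_cost(q1, q2, p1, p2):
    """Cost of collapsing edge (p1, p2) into its midpoint.

    Simplification for this sketch: real implementations usually solve
    for the position that minimizes the combined quadric instead.
    """
    mid = tuple((a + b) / 2 for a, b in zip(p1, p2))
    return quadric_error(add_quadrics(q1, q2), mid)
```

An edge lying in a flat wall costs nothing to collapse, which is exactly why the decimated mesh loses its spikes first and its walls last.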

Using this Hologram of a space, you can position Kinect-scanned objects or 3D models relative to each other based upon their true relative positions, without actually being in the room. Then, when you go back to the real room, all you have to do is make sure the actual room coincides with the scanned room model, add world anchors to the models inside the room, and then get rid of the room Hologram. Thus, you can use this virtual room as a kind of ‘staging area’, which I successfully did for a client location to which physical access is very limited indeed.

You might notice a few odd things. There are two holes in the living room floor: that is where the black leather couch and the black swivel chair are. As I’ve noticed before, black doesn’t work well with the HoloLens spatial mapping. What I also find fascinating is the rectangular area that seems to float about a meter from the left side of the house. That’s actually a large mirror hanging on the bedroom wall, but the HoloLens spatial mapping apparently sees it as a reclined area. Very interesting. So this gives you not only a view of my house, but also a bit of insight into HoloLens quirks.

The project shown above, with both models (the full and the simplified one) in it, can be found here.

* I love using the phrase “master bedroom” in relation to our house, as it conjures up images of a very large room like you would find in a typical US suburban family house. I can assure you neither our house nor our bedroom does justice to that image. This is a Dutch house. But it is made of concrete, unlike most houses in the USA, and will probably last well beyond my lifespan.

7 comments:

Hi

Can you explain more about the position of your anchor?

I am trying to build a showroom for a client, but the anchors are relative to the starting position and orientation of the HoloLens.

I want to place my holograms in Unity so that, when I start my application, the holograms are in place wherever I start it.

Thank you

I am not really using an anchor in this sample. What you need to do is:

* Place all objects so they are in the right place

* Create anchors either for all objects, or place the objects inside an empty GameObject and anchor that

* Wait a little: the anchor will get more detailed over time

* Store the anchor in local storage

* Next time, when the app starts, you can retrieve the anchor(s) and apply them to the objects
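Anchoring a single empty parent works because its children are stored as offsets in the parent's coordinate frame: when the parent's pose is restored from an anchor, every child follows. Unity handles this through its transform hierarchy; the Python sketch below only illustrates the arithmetic for a simplified pose of position plus yaw (rotation around the vertical Y axis). The `Pose` class and `child_world_position` function are hypothetical names for illustration, not Unity APIs.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Hypothetical simplified pose: position plus yaw in radians
    (rotation around the vertical Y axis)."""
    x: float
    y: float
    z: float
    yaw: float


def child_world_position(parent, local):
    """World position of a child stored as an offset in the parent's frame."""
    lx, ly, lz = local
    c, s = math.cos(parent.yaw), math.sin(parent.yaw)
    # Rotate the local offset around Y, then translate by the parent position.
    wx = c * lx + s * lz
    wz = -s * lx + c * lz
    return (parent.x + wx, parent.y + ly, parent.z + wz)
```

If the restored anchor puts the parent somewhere else or turns it, the children keep their layout relative to each other; only the parent's pose has to be persisted.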

A scanned room can NOT be used to generate anchors off-site. I have tried that road before, and it does not work.

>>>“A scanned room can NOT be used to generate anchors off-site. I have tried that road before, and it does not work.”

Do you mean that it is NOT possible to define the position of an object relative to a scanned 'SpatialMap' of the room within the Unity editor, and that the world anchor has to be created at runtime?

Yes. I am afraid that is the case

OK, so I have a scan of my room, created while running a Unity application, and I added empty anchor objects at runtime in key locations (e.g. corners, on a table, etc.). I can then bring the mesh into Unity and place objects relative to the space, but how do I make sure those objects align to the anchors? Is there a way to load up the app a second time with new objects pinned to old anchors?

Thank you!

Hi Unknown,

What you need to do is use at least two anchors: one as an origin and one as a direction point. Both points need to be identifiable in the mesh. Then you can move the model so both origin points coincide, and rotate it towards the second point.
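The two-anchor approach can be sketched as follows, assuming the scanned mesh and the live room share the same vertical (Y) axis, so only a rotation around Y plus a translation is needed. The horizontal heading from origin to direction point is compared in both spaces to get the rotation; the translation then makes the origins coincide. All function names are hypothetical, and this is a Python illustration of the math, not Unity code.

```python
import math


def rotate_y(p, yaw):
    """Rotate point p = (x, y, z) around the vertical Y axis by yaw radians."""
    x, y, z = p
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * x + s * z, y, -s * x + c * z)


def heading(v):
    """Angle of the horizontal part of v, measured from +Z towards +X."""
    return math.atan2(v[0], v[2])


def align_two_points(model_origin, model_dir, world_origin, world_dir):
    """Return (yaw, translation) that maps model space onto world space."""
    mv = tuple(d - o for d, o in zip(model_dir, model_origin))
    wv = tuple(d - o for d, o in zip(world_dir, world_origin))
    # Rotation: difference between the two horizontal headings.
    yaw = heading(wv) - heading(mv)
    # Translation: move the rotated model origin onto the world origin.
    rotated_origin = rotate_y(model_origin, yaw)
    translation = tuple(w - r for w, r in zip(world_origin, rotated_origin))
    return yaw, translation


def transform_point(p, yaw, translation):
    """Map a model-space point into world space."""
    r = rotate_y(p, yaw)
    return tuple(a + b for a, b in zip(r, translation))
```

Only the horizontal heading of the direction point is used, so a small height error in either anchor does not tilt the model; every object placed against the mesh in the editor can then be mapped with `transform_point`.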

Thank you so much Joost! This is exactly the solution I was looking for. Brilliant.

- kotavy
